Understanding subjective and objective assessments, and the difference between the two, is central to designing effective exams. Educators need a strong grasp of both types to assess student learning accurately. Each style has specific attributes that make it better suited to certain subjects and learning outcomes. Knowing when to use subjective rather than objective assessments, and vice versa, and identifying resources that make exams fairer, are essential to educators’ efforts to accurately gauge their students’ academic progress.

Let’s take a closer look at subjective and objective assessments, how they are measured, and the ways in which they can be used effectively to evaluate student knowledge.

What is a subjective assessment?

According to EnglishPost.org, “Subjective tests aim to assess areas of students’ performance that are complex and qualitative, using questioning that may have more than one correct answer or more ways to express it.” Subjective assessments are popular because they typically take less time for teachers to develop, and they offer students the ability to be creative or critical in constructing their answers. Some examples of subjective assessment questions include asking students to:

- Respond with short answers

- Craft their answers in the form of an essay

- Define a term, concept, or significant event

- Respond with a critically thought-out or factually supported opinion

- Respond to a theoretical scenario

Subjective assessments are excellent for subjects like writing, reading, art/art history, philosophy, political science, or literature. More specifically, any subject that encourages debate, critical thinking, interpretation of art forms or policies, or the application of specific knowledge to real-world scenarios is well suited to subjective assessment. Suitable formats include long-form essays, debates, interpretations, and definitions of terms, concepts, and events, as well as responses to theoretical scenarios and defenses of opinions.

What is an objective assessment?

Objective assessment, on the other hand, is far more exact and consequently less open to students’ interpretation of concepts or theories. Edulytic defines objective assessment as “a way of examining in which questions asked has [sic] a single correct answer.” Mathematics, geography, science, engineering, and computer science all rely heavily on objective exams. Some of the most common item types for this style of assessment include:

- Multiple-choice

- True / false

- Matching

- Fill in the blank

- Assertion and reason

Assessments measure and evaluate student knowledge, and doing so involves grading. Just as subjective and objective assessments differ, so do the ways educators measure them.

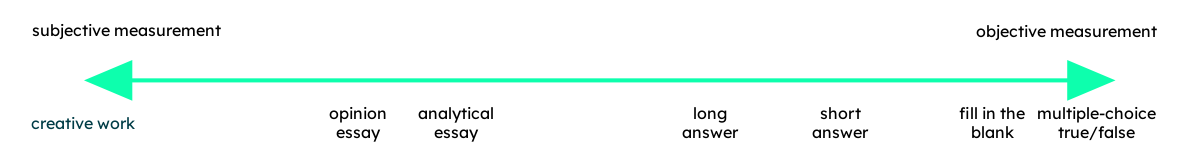

Subjective performance measurements depend on the observer or grader and involve interpretation. A creative work is perhaps the clearest example of where subjective measurement applies: grammar and syntax are, of course, necessary to express ideas, but the quality of creative work is subject to human judgment. Opinion essays are also measured subjectively, since there is no one right answer and they are evaluated on the strength of their persuasion; the flow of logic or the writing style, in addition to the content of an answer, can influence the person marking the work.

In brief, subjective measurement allows for more than one correct answer and assesses qualitative or analytic thinking.

On the other hand, objective measurement is conducted independently of opinion. One extreme example is feeding a multiple-choice exam into a Scantron machine, which provides no feedback and simply marks each answer right or wrong. Even when a person grades an objective assessment and provides feedback, the answers are not open to interpretation. Other examples of objective measurement include mathematics problems with a single, unquestionable correct answer that is, again, independent of the grader’s opinion (Jackson, retrieved 2023).

In sum, objective measurement is implicitly consistent, impartial, and usually quantifiable.
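To make the point about grader independence concrete, here is a minimal sketch of answer-key scoring, the kind of comparison a Scantron-style reader performs. The answer key, item IDs, and the `score_objective` function are hypothetical illustrations, not any vendor’s format. Because a response either matches the key or does not, the same answer sheet always gets the same score.

```python
# Hypothetical answer key: item ID -> the single correct option.
ANSWER_KEY = {"Q1": "B", "Q2": "D", "Q3": "A", "Q4": "C"}


def score_objective(responses: dict[str, str], key: dict[str, str] = ANSWER_KEY) -> int:
    """Return the number of items whose response matches the key exactly.

    No judgment is involved: a response is either identical to the key or it
    is not, so any grader (or machine) produces the same score for a sheet.
    """
    return sum(1 for item, correct in key.items() if responses.get(item) == correct)


sheet = {"Q1": "B", "Q2": "A", "Q3": "A"}  # Q4 left blank, so it counts as incorrect
print(score_objective(sheet))  # 2
```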

That said, the measurement of assessments, whether subjective or objective, falls on a spectrum.

A creative work may be graded almost entirely subjectively, while a personal or opinion essay, though subjective in nature, may fall toward the middle of the spectrum. An analytical essay also sits toward the middle, because it offers objective measures like grammar, structure, use of primary or secondary sources, and citation. At the objective end of the spectrum are multiple-choice questions and mathematics problems with a single correct answer. But even mathematics can fall toward the middle, for example when students work through proofs and theorems to demonstrate logic and analytical thinking. In the case of a proof, a grader has to interpret how deeply a student understands the concept and might even grant partial credit.
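Partial credit on a proof still depends on the grader’s judgment. A rubric, however, can fix how that judgment turns into points. The sketch below assumes a hypothetical three-criterion proof rubric with made-up point values. The grader decides how many points each criterion earns, and the code only enforces the caps and totals the result.

```python
from dataclasses import dataclass


@dataclass
class Criterion:
    name: str
    max_points: int


# Hypothetical rubric for a short proof; criteria and weights are illustrative only.
PROOF_RUBRIC = [
    Criterion("states the correct hypothesis", 2),
    Criterion("valid logical steps", 5),
    Criterion("reaches the correct conclusion", 3),
]


def partial_credit(awarded: dict[str, int], rubric: list[Criterion] = PROOF_RUBRIC) -> tuple[int, int]:
    """Sum the points a grader awarded per criterion, capped at each criterion's maximum."""
    earned = 0
    for criterion in rubric:
        points = awarded.get(criterion.name, 0)  # unscored criteria earn nothing
        earned += max(0, min(points, criterion.max_points))
    total = sum(c.max_points for c in rubric)
    return earned, total


# A proof with a sound setup, a gap in the reasoning, and no valid conclusion.
print(partial_credit({"states the correct hypothesis": 2, "valid logical steps": 3}))  # (5, 10)
```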

What makes a subjective or objective assessment effective?

The word “subjective” has become something of a pejorative in assessment and grading, while the word “objective” is held up as a paragon of fairness. In reality, both subjective and objective assessments are effective ways to measure learning when they are designed well and used appropriately.

Subjective and objective assessments are effective when they show reliability and validity.

An assessment is reliable when it measures student learning consistently. A reliable assessment gives the same score to the same quality of work, regardless of when it is graded or who grades it, and this consistency makes its scores trustworthy. Many standardized tests, like those used for licensing or certification, are considered highly reliable. In the case of subjective assessment, rubrics can increase reliability.
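One simple way to check whether a rubric is producing consistent scores is to have two graders score the same set of responses and see how often they agree. The sketch below computes plain percent agreement on hypothetical 0–4 rubric scores. It is an illustrative assumption rather than a prescribed method. More formal statistics exist, such as Cohen’s kappa, which also corrects for agreement expected by chance.

```python
def percent_agreement(grader_a: list[int], grader_b: list[int]) -> float:
    """Fraction of responses on which two graders gave exactly the same score."""
    if len(grader_a) != len(grader_b) or not grader_a:
        raise ValueError("both graders must score the same, non-empty set of responses")
    matches = sum(1 for a, b in zip(grader_a, grader_b) if a == b)
    return matches / len(grader_a)


# Hypothetical rubric scores (0-4) that two graders gave the same eight essays.
grader_a = [4, 3, 3, 2, 4, 1, 3, 2]
grader_b = [4, 3, 2, 2, 4, 1, 3, 3]
print(percent_agreement(grader_a, grader_b))  # 0.75
```

A low agreement rate is a signal to tighten the rubric’s descriptors or calibrate graders on sample responses before scoring the full set.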

An assessment is valid when it measures what it was intended to measure. A valid assessment accurately captures the intended understanding, whether that is analytic thinking or factual knowledge.

When should you use each assessment type?

You wouldn’t ask a nursing student to write an opinion essay on differential diagnosis and pharmaceutical treatment; at the same time, you wouldn’t ask graduate students of English literature to answer true/false questions about the works of Shakespeare. Matching the type of assessment to the knowledge and level of learning being assessed is critical.

The first step towards effective exam design is to consider the purpose of the assessment and uphold validity.

When an instructor wants to measure critical thinking skills, a student’s ability to come up with their own original ideas, or even how they arrived at their response, subjective assessment is the best fit. When an instructor wants to evaluate a student’s knowledge of facts, for instance, objective measurement is called for. Of course, exams can offer a variety of formats to measure both critical thinking and breadth of knowledge; many assessments benefit from the inclusion of both subjective and objective assessment questions.

Subjective assessments lend themselves to programs where students are asked to apply what they’ve learned according to specific scenarios. Any field of study that emphasizes creativity, critical thinking, or problem-solving may place a high value on the qualitative aspects of subjective assessments. These could include:

- Humanities

- Education

- Management

- Arbitration

- Design

Objective assessments are popular options for programs with curricula structured around absolutes or definite right and wrong answers; the sciences are a good example. If there are specific industry standards or best practices that professionals must follow at all times, objective assessments are an effective way to gauge students’ mastery of the requisite techniques or knowledge. Such programs might include:

- Nursing

- Engineering

- Finance

- Medicine

- Law

Creating reliable and valid assessments is key to accurately measuring students’ mastery of subject matter, and an exam blueprint can help. A blueprint makes assessments easier to write and helps maximize the reliability and validity of their questions by letting teachers track how each question maps to course learning objectives, specific content sections, and the corresponding level of cognition being assessed.
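A blueprint is essentially a table with one row per question. The sketch below models it as a small data structure and checks it for coverage gaps. The field names, objectives, and the use of Bloom’s-style cognitive levels are hypothetical choices for illustration, not a format required by any particular tool.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class BlueprintItem:
    question_id: str
    objective: str        # course learning objective the item targets
    content_section: str  # section of the course content it draws on
    cognitive_level: str  # assumed Bloom's-style level, e.g. "remember" or "evaluate"


# Hypothetical blueprint for a four-question exam.
blueprint = [
    BlueprintItem("Q1", "LO1: define key terms", "Unit 1", "remember"),
    BlueprintItem("Q2", "LO1: define key terms", "Unit 1", "remember"),
    BlueprintItem("Q3", "LO2: apply concepts", "Unit 2", "apply"),
    BlueprintItem("Q4", "LO3: evaluate arguments", "Unit 3", "evaluate"),
]


def coverage(items, required_objectives):
    """Count items per objective and list any required objective with no items."""
    per_objective = Counter(item.objective for item in items)
    missing = [obj for obj in required_objectives if per_objective[obj] == 0]
    return per_objective, missing


required = ["LO1: define key terms", "LO2: apply concepts",
            "LO3: evaluate arguments", "LO4: compare case studies"]
counts, missing = coverage(blueprint, required)
print(dict(counts))
print(Counter(item.cognitive_level for item in blueprint))  # balance of cognitive levels
print(missing)  # ['LO4: compare case studies']
```

Running a check like this before writing questions shows at a glance that one objective has no items and that most items target recall. Both are threats to validity that are cheaper to fix at the planning stage.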

Once educators have planned their exams carefully, they can begin writing questions. Carnegie Mellon University’s guide to creating exams offers the following suggestions for writing objective questions:

- Write questions with only one correct answer.

- Compose questions carefully to avoid grammatical clues that could inadvertently signify the correct answer.

- Make sure that the wrong answer choices are actually plausible.

- Avoid “all of the above” or “none of the above” answers as much as possible.

- Do not write overly complex questions. (Avoid double negatives, idioms, etc.)

- Write questions that assess only a single idea or concept.
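A few of these guidelines can be checked mechanically before an exam is finalized. The sketch below is a hypothetical reviewer for multiple-choice items. It flags items that do not have exactly one correct answer, uses of “all of the above” or “none of the above,” and a rough double-negative pattern in the stem. The `MCQItem` structure and the negation word list are assumptions. Judgments like whether distractors are plausible or whether an item tests a single concept still need a human reviewer.

```python
import re
from dataclasses import dataclass


@dataclass
class MCQItem:
    stem: str
    options: list[str]
    correct: set[int]  # indexes of the options marked correct


def review_item(item: MCQItem) -> list[str]:
    """Flag guideline violations that can be detected mechanically."""
    warnings = []
    if len(item.correct) != 1:
        warnings.append("item should have exactly one correct answer")
    for option in item.options:
        if option.strip().lower() in {"all of the above", "none of the above"}:
            warnings.append(f"avoid '{option.strip()}' as an option")
    # Rough double-negative heuristic: two or more negation words in the stem.
    negations = re.findall(r"\b(not|no|never|none|non)\b", item.stem.lower())
    if len(negations) >= 2:
        warnings.append("stem may contain a double negative")
    return warnings


item = MCQItem(
    stem="Which of the following is not a non-renewable resource?",
    options=["Coal", "Solar energy", "Natural gas", "All of the above"],
    correct={1},
)
print(review_item(item))
```

For the sample item, this flags both the “All of the above” option and the “not a non-renewable” construction in the stem.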

Subjectivity often feels like a “bad word” in the world of assessment and grading, but it is not. It just needs to be used appropriately: in the right place and at the right time. In the Journal of Economic Behavior & Organization, researchers Méndez and Jahedi report, “Our results indicate that general subjective measures can effectively capture changes in both the explicit and the implicit components of the variable being measured and, therefore, that they can be better suited for the study of broadly defined concepts than objective measures.” Subjective assessments have a place in demonstrating knowledge of concepts, particularly when students express an original opinion, thought, or argument that has no single correct answer.

What is “bad,” however, is bias, whether unconscious or conscious, in assessment design or grading. Bias is an unfair partiality for or against something, largely based on opinion and resistance to facts.

Subjective assessments are more vulnerable to bias, so it’s important to ensure that their questions address what they are supposed to measure (upholding validity) and that grader bias is mitigated with rubrics that make marking more consistent (upholding reliability). Other ways to mitigate bias include grading by question rather than by student and grading name-blind.
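Name-blind grading and grading by question fit together well in practice. First replace names with opaque IDs, then regroup responses so that every answer to one question is graded before moving on to the next. The sketch below shows both steps on hypothetical submission data. The ID format and the `anonymize` and `by_question` helpers are illustrative assumptions. Grading software that supports anonymous grading handles this internally.

```python
import random
from collections import defaultdict

# Hypothetical submissions: student name -> {question ID: response text}.
submissions = {
    "Ada": {"Q1": "answer A1", "Q2": "answer A2"},
    "Ben": {"Q1": "answer B1", "Q2": "answer B2"},
    "Cy": {"Q1": "answer C1", "Q2": "answer C2"},
}


def anonymize(subs, seed=None):
    """Replace names with opaque IDs; the instructor keeps `key` until grading ends."""
    rng = random.Random(seed)
    names = list(subs)
    rng.shuffle(names)  # shuffled so IDs don't follow roster order
    key = {f"S{i + 1:03d}": name for i, name in enumerate(names)}
    blind = {anon_id: subs[name] for anon_id, name in key.items()}
    return blind, key


def by_question(blind_subs):
    """Regroup responses so all answers to Q1 are graded before any answer to Q2."""
    queue = defaultdict(list)
    for anon_id, answers in blind_subs.items():
        for question, response in answers.items():
            queue[question].append((anon_id, response))
    return dict(queue)


blind, key = anonymize(submissions, seed=7)
for question, responses in sorted(by_question(blind).items()):
    print(question, [anon_id for anon_id, _ in responses])
```

Names are reattached through `key` only after every question has been scored. Until then, the grader sees each answer only next to other answers to the same question.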

How to use software like Gradescope or ExamSoft to help improve the reliability and validity of your exams

The efficacy of subjective and objective assessments is influenced by reliability, validity, and bias. Wherever possible, it is important to bolster reliability (consistency) and validity (accuracy) while reducing bias (unfair partiality). Reliability and validity are largely established while an assessment is designed and administered, by ensuring that questions are fair and aligned with learning expectations and course content. Bias, by contrast, can interfere during grading.

One important and frequently overlooked aspect of creating reliable and valid assessments is scoring them without bias. How can teachers ensure that essay or short-answer questions are all evaluated in the same manner, especially when they are responsible for scoring a substantial number of exams?

- A rubric, which lists the specific requirements for mastering the assignment, helps educators set clear and concise expectations for students, stay focused on whether those requirements have been met, and then communicate how well they were met. Using rubrics also increases consistency and reduces time spent grading. (upholds reliability, mitigates bias)

- Name-blind grading is a key component of unbiased grading. Removing the student’s name from the assessment removes a common source of prejudice. It can be enabled in grading software or done by folding down the corner of pages that show names. (mitigates bias)

- Grading by question instead of by student—grading all of one question first before moving on to the others—makes sure you’re grading to the same standard and not influenced by answers to a previous question (Aldrich, 2017). (upholds reliability, mitigates bias)

- Student data insights can turn grading into a learning opportunity. By conducting item analysis, that is, formally examining student responses and patterns, instructors can pinpoint whether their assessments accurately measure student knowledge. Item analysis gives instructors feedback on their instruction and makes learning visible. (upholds validity)

- Offer a variety of assessment formats to accommodate different learning styles and measure different components of learning. Objective assessments like multiple-choice exams can assess a large breadth of knowledge in a short amount of time. Subjective assessments like short- and long-answer questions can test whether students have a deep conceptual understanding of a subject by asking them to explain their approach or thinking. Using a combination of formats within the same exam can also bolster reliability and validity. (upholds reliability, upholds validity)

- And finally, consider eliminating grading on a curve (Calsamiglia & Loviglio, 2019). Grading on a curve, which adjusts students’ grades relative to those of their peers, sends an implicit message that students are competing with each other, including with those who might be cheating. According to research, “moving away from curving sets the expectation that all students have the opportunity to achieve the highest possible grade” (Schinske & Tanner, 2014). (upholds reliability, upholds validity, mitigates bias)
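Item analysis, mentioned in the list above, usually starts with two classical statistics for each question. The difficulty index is the proportion of students who answered the question correctly. The discrimination index is the proportion correct among the highest scorers on the whole exam minus the proportion correct among the lowest scorers. The sketch below computes both from a hypothetical 0/1 score matrix. It assumes the common convention of using the top and bottom 27% of students as comparison groups. A negative discrimination value means weaker students outperformed stronger ones on that item, which often points to a miskeyed or confusing question.

```python
def item_analysis(scores: list[list[int]], group_fraction: float = 0.27):
    """Classical item analysis on a 0/1 score matrix (rows = students, columns = items).

    difficulty     = proportion of all students who answered the item correctly
    discrimination = proportion correct in the top group minus the bottom group,
                     where groups are the highest and lowest total scorers
    """
    n_students = len(scores)
    n_items = len(scores[0])
    # Python's sort is stable, so ties in total score keep their original order.
    ranked = sorted(scores, key=sum, reverse=True)
    k = max(1, round(n_students * group_fraction))
    upper, lower = ranked[:k], ranked[-k:]

    results = []
    for j in range(n_items):
        difficulty = sum(row[j] for row in scores) / n_students
        discrimination = (sum(row[j] for row in upper) - sum(row[j] for row in lower)) / k
        results.append((round(difficulty, 2), round(discrimination, 2)))
    return results


# Hypothetical results for six students on four items (1 = correct, 0 = incorrect).
scores = [
    [1, 1, 1, 0],
    [1, 1, 1, 0],
    [1, 0, 1, 0],
    [1, 1, 0, 0],
    [1, 0, 0, 1],
    [0, 0, 0, 1],
]
for item, (p, d) in enumerate(item_analysis(scores), start=1):
    flag = "  <- review this item" if d < 0 else ""
    print(f"Item {item}: difficulty={p}, discrimination={d}{flag}")
```

On this sample, item 4 is answered correctly only by the two lowest scorers and gets a discrimination of -1.0, so it is the item to revisit. Item 1, which nearly everyone answered correctly, has a difficulty of 0.83.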

Assessment tools offer the following benefits for educators:

- Electronically link rubrics to learning objectives and outcomes or accreditation standards.

- Generate comprehensive reports on student or class performance.

- Share assessment data with students to improve self-assessment.

- Gain a more complete understanding of student performance, no matter the evaluation method.
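Linking items to learning objectives is what makes per-objective reports possible. The sketch below shows the underlying arithmetic: it rolls one student’s item scores up into the percentage of available points earned for each objective. The item-to-objective mapping, point values, and the `objective_report` function are hypothetical and are not how ExamSoft or Gradescope implement their reports. The same roll-up could be summed across a class for a class-level view.

```python
from collections import defaultdict

# Hypothetical mapping from each exam item to the learning objective it assesses.
ITEM_OBJECTIVE = {"Q1": "LO1", "Q2": "LO1", "Q3": "LO2", "Q4": "LO3"}


def objective_report(points_earned, points_possible, item_objective=ITEM_OBJECTIVE):
    """Percentage of available points earned per learning objective."""
    earned, available = defaultdict(float), defaultdict(float)
    for item, objective in item_objective.items():
        earned[objective] += points_earned.get(item, 0)  # missing answers earn 0
        available[objective] += points_possible[item]
    return {obj: round(100 * earned[obj] / available[obj], 1) for obj in available}


points_available = {"Q1": 2, "Q2": 2, "Q3": 5, "Q4": 10}
student_points = {"Q1": 2, "Q2": 1, "Q3": 5, "Q4": 4}
print(objective_report(student_points, points_available))
# {'LO1': 75.0, 'LO2': 100.0, 'LO3': 40.0}
```

A report like this shows a student, or an instructor, that the weak spot is LO3 rather than the exam as a whole. That is the kind of feedback that can guide study habits and instruction.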

Ultimately, rubric and assessment software tools like ExamSoft and Gradescope give both instructors and students a clearer picture of exam performance as it pertains to specific assignments or learning outcomes. This knowledge is instrumental to educators’ efforts to improve teaching methods, exam creation, and grading, as well as to students’ ability to refine their study habits.

Creating reliable and valid assessments with unbiased measurement will always be an important aspect of an educator’s job. Using all the tools at their disposal is one of the most effective ways to ensure that all assessments, whether subjective or objective, accurately measure what students have learned.